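This article describes how to collect logs in a k8s cluster with Filebeat, send them through Kafka to Logstash, have Logstash store them in Elasticsearch, and finally view and search them in Kibana.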

## Deploy Kafka

We deploy Kafka into the k8s cluster directly with Helm. If you are using a TKE cluster, simply deploy the application into that TKE cluster.

```shell
helm repo add bitnami https:
```

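### Create Kafka topics

Because Kafka runs in a pod, if you don't have the Kafka client tools available locally, you can log in to the pod (for example with `kubectl exec`) and create the topics there.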

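```shell
# Create the two topics used in this article
sh kafka-topics.sh --create --zookeeper 10.0.0.187:2181 --replication-factor 1 --partitions 3 --topic log-nginx
sh kafka-topics.sh --create --zookeeper 10.0.0.187:2181 --replication-factor 1 --partitions 3 --topic log-k8s
# Delete, list and describe topics
sh kafka-topics.sh --delete --zookeeper 10.0.0.187:2181 --topic log
sh kafka-topics.sh --zookeeper 10.0.0.187:2181 --list
sh kafka-topics.sh --zookeeper 10.0.0.187:2181 --describe --topic log
# Consume a topic from the beginning to check that messages arrive
sh kafka-console-consumer.sh --bootstrap-server 10.0.0.187:9092 --topic log --from-beginning
```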

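Use the commands above to create the topics; the block also includes a few other commonly used commands (deleting, listing and describing topics, and consuming a topic from the beginning). Note that Kafka 3.0 and later removed the `--zookeeper` option from `kafka-topics.sh`; on those versions pass `--bootstrap-server 10.0.0.187:9092` instead.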
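If you create topics from a script rather than by hand, the create commands above can be generated. The following is a minimal Python sketch: the helper name `create_topic_cmd` is hypothetical, the defaults are the addresses and settings used in this article, and it only builds the argument list. Actually running it assumes the Kafka scripts are on the PATH, for example inside the Kafka pod.

```python
import shlex
from typing import List, Optional


def create_topic_cmd(topic: str,
                     partitions: int = 3,
                     replication_factor: int = 1,
                     zookeeper: Optional[str] = "10.0.0.187:2181",
                     bootstrap_server: Optional[str] = None) -> List[str]:
    """Build the argv for `kafka-topics.sh --create` (hypothetical helper).

    Older Kafka versions accept --zookeeper; Kafka 3.0+ only accepts
    --bootstrap-server, so pass bootstrap_server for those versions.
    """
    if partitions < 1 or replication_factor < 1:
        raise ValueError("partitions and replication_factor must be >= 1")
    cmd = ["kafka-topics.sh", "--create"]
    if bootstrap_server is not None:
        # --bootstrap-server takes precedence when both are given.
        cmd += ["--bootstrap-server", bootstrap_server]
    elif zookeeper is not None:
        cmd += ["--zookeeper", zookeeper]
    else:
        raise ValueError("either zookeeper or bootstrap_server is required")
    cmd += ["--replication-factor", str(replication_factor),
            "--partitions", str(partitions),
            "--topic", topic]
    return cmd


if __name__ == "__main__":
    for topic in ("log-nginx", "log-k8s"):
        # Only prints the command; run it with subprocess.run(cmd, check=True)
        # where the Kafka scripts are on PATH (e.g. inside the Kafka pod).
        print(shlex.join(create_topic_cmd(topic)))
```

Building an argument list instead of a single string avoids shell quoting issues when the command is later passed to `subprocess.run`.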

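## Deploy Filebeat

There are two ways to deploy Filebeat: as a sidecar, which collects the logs of a single application, or as a DaemonSet, which collects the logs of all containers on each node.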

### Deploy Filebeat as a sidecar

Here we deploy Filebeat as a sidecar to collect nginx logs. For the full deployment steps, see https://www.niewx.cn/mybook/k8s/userd-filebeat-as-sidecar-collect-log.html

The Filebeat configuration needs one change: the referenced document ships logs to Elasticsearch, whereas here we send them to Kafka.

```yaml
apiVersion: v1
data:
  filebeat.yml: |-
    filebeat.inputs:
    - type: log
      paths:
        - /var/log/nginx/access.log
      fields:
        app: www
        type: nginx-access
      fields_under_root: true

    # With the Kafka output, Filebeat does not load index templates or ILM
    # policies into Elasticsearch automatically; these are left from the ES setup.
    setup.ilm.enabled: false
    setup.template.name: "nginx-access"
    setup.template.pattern: "nginx-access-*"

    output:
      kafka:
        enabled: true
        hosts: ["10.0.0.187:9092"]
        topic: log-nginx
        max_message_bytes: 5242880
        partition.round_robin:
          reachable_only: true
        keep-alive: 120
        required_acks: 1
kind: ConfigMap
metadata:
  name: filebeat-nginx-config
  namespace: log
```
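The `partition.round_robin` setting with `reachable_only: true` spreads events across the partitions of `log-nginx` one after another, skipping partitions whose leader is currently unreachable. The Python sketch below is a simplified model of that behaviour, not Filebeat's actual Go implementation; the class name and the way reachability is passed in are assumptions made for illustration.

```python
from itertools import count
from typing import Iterable, List, Set


class RoundRobinPartitioner:
    """Simplified model of round-robin partitioning (hypothetical class)."""

    def __init__(self, partitions: int, reachable_only: bool = True) -> None:
        self.partitions = list(range(partitions))
        self.reachable_only = reachable_only
        self._counter = count()  # shared cursor, advances once per event

    def next_partition(self, reachable: Set[int]) -> int:
        if self.reachable_only:
            # Skip partitions whose leader is currently unreachable.
            candidates = [p for p in self.partitions if p in reachable]
        else:
            # Every partition is a candidate; a send to an unreachable
            # leader would have to wait (not modelled here).
            candidates = self.partitions
        if not candidates:
            raise RuntimeError("no partition available")
        return candidates[next(self._counter) % len(candidates)]


def assign(events: Iterable[str], partitioner: RoundRobinPartitioner,
           reachable: Set[int]) -> List[int]:
    """Partition chosen for each event, in order."""
    return [partitioner.next_partition(reachable) for _ in events]


if __name__ == "__main__":
    p = RoundRobinPartitioner(partitions=3)  # log-nginx has 3 partitions
    print(assign(["e1", "e2", "e3", "e4"], p, reachable={0, 1, 2}))  # [0, 1, 2, 0]
    print(assign(["e5", "e6"], p, reachable={0, 2}))  # [0, 2]: partition 1 skipped
```

The trade-off the model illustrates: with `reachable_only` enabled, publishing keeps flowing during a partial broker outage, at the cost of temporarily uneven load across partitions.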

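### Deploy Filebeat as a DaemonSet

Deployed as a DaemonSet, Filebeat collects logs from the container log directory on every node. The YAML configuration is as follows.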

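The ConfigMap is shown below. `filebeat.autodiscover` is Filebeat's autodiscover feature: containers that meet the specified conditions get their own dedicated configuration. For the available options, see the official documentation at https://www.elastic.co/guide/en/beats/filebeat/current/configuration-autodiscover.html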

```yaml
apiVersion: v1
data:
  filebeat.yml: |-
    filebeat.autodiscover:
      providers:
        - type: kubernetes
          host: ${NODE_NAME}
          hints.enabled: true
          templates:
            - condition:
                equals:
                  kubernetes.namespace: tke-test
            - condition:
                equals:
                  kubernetes.container.name: nginx
              config:
                - type: docker
                  paths:
                    - /var/log/containers/*-${data.kubernetes.container.id}.log

    processors:
      - add_cloud_metadata:
      - add_host_metadata:

    cloud.id: ${ELASTIC_CLOUD_ID}
    cloud.auth: ${ELASTIC_CLOUD_AUTH}

    output:
      kafka:
        enabled: true
        hosts: ["10.0.0.187:9092"]
        topic: log-k8s
        max_message_bytes: 5242880
        partition.round_robin:
          reachable_only: true
        keep-alive: 120
        required_acks: 1
kind: ConfigMap
metadata:
  labels:
    k8s-app: filebeat
  name: filebeat-config
  namespace: log
```
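Each entry under `templates` is checked against the metadata of the containers the kubernetes provider discovers; when a `condition` matches, its `config` is started with placeholders such as `${data.kubernetes.container.id}` filled in from that container's metadata. The Python sketch below models only the `equals` condition and simple placeholder substitution. It is a simplification (Filebeat also supports conditions such as `contains`, `regexp`, `and`, `or` and `not`), and the function names and sample metadata are hypothetical.

```python
import re
from typing import Any, Dict

# Matches placeholders such as ${data.kubernetes.container.id}
PLACEHOLDER = re.compile(r"\$\{data\.([A-Za-z0-9_.]+)\}")


def lookup(meta: Dict[str, Any], dotted: str) -> Any:
    """Resolve a dotted field like 'kubernetes.container.name' in nested metadata."""
    node: Any = meta
    for part in dotted.split("."):
        if not isinstance(node, dict) or part not in node:
            return None
        node = node[part]
    return node


def matches_equals(condition: Dict[str, Any], meta: Dict[str, Any]) -> bool:
    """Model of an `equals` condition: every listed field must equal its value."""
    return all(lookup(meta, field) == value
               for field, value in condition.get("equals", {}).items())


def render(text: str, meta: Dict[str, Any]) -> str:
    """Substitute ${data.<field>} with values from the discovered container."""
    def repl(m: "re.Match[str]") -> str:
        value = lookup(meta, m.group(1))
        if value is None:
            raise KeyError(m.group(1))
        return str(value)
    return PLACEHOLDER.sub(repl, text)


# The nginx template from the ConfigMap, expressed as Python data.
nginx_template = {
    "condition": {"equals": {"kubernetes.container.name": "nginx"}},
    "config": [{"type": "docker",
                "paths": ["/var/log/containers/*-${data.kubernetes.container.id}.log"]}],
}

# Hypothetical metadata for one discovered container.
meta = {"kubernetes": {"namespace": "tke-test",
                       "container": {"name": "nginx", "id": "abc123"}}}

if matches_equals(nginx_template["condition"], meta):
    paths = [render(p, meta) for p in nginx_template["config"][0]["paths"]]
    print(paths)  # ['/var/log/containers/*-abc123.log']
```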

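The DaemonSet YAML is shown below. It runs under the `filebeat` ServiceAccount, which this article does not define; the kubernetes autodiscover provider needs RBAC permissions to get, list and watch pods, so create that ServiceAccount with a suitable ClusterRole and ClusterRoleBinding first.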

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  labels:
    k8s-app: filebeat
  name: filebeat
  namespace: log
spec:
  selector:
    matchLabels:
      k8s-app: filebeat
  template:
    metadata:
      labels:
        k8s-app: filebeat
    spec:
      containers:
        - args:
            - -c
            - /etc/filebeat.yml
            - -e
          env:
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  apiVersion: v1
                  fieldPath: spec.nodeName
          image: elastic/filebeat:7.4.2
          imagePullPolicy: IfNotPresent
          name: filebeat
          resources: {}
          securityContext:
            runAsUser: 0
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
            - mountPath: /etc/filebeat.yml
              name: config
              readOnly: true
              subPath: filebeat.yml
            - mountPath: /usr/share/filebeat/data
              name: data
            - mountPath: /var/lib/docker/containers
              name: varlibdockercontainers
              readOnly: true
            - mountPath: /var/log
              name: varlog
              readOnly: true
      dnsPolicy: ClusterFirstWithHostNet
      hostNetwork: true
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      serviceAccount: filebeat
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      volumes:
        - configMap:
            defaultMode: 384
            name: filebeat-config
          name: config
        - hostPath:
            path: /var/lib/docker/containers
            type: ""
          name: varlibdockercontainers
        - hostPath:
            path: /var/log
            type: ""
          name: varlog
        - hostPath:
            path: /var/lib/filebeat-data
            type: DirectoryOrCreate
          name: data
```
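On nodes using the Docker runtime, the kubelet typically exposes each container's log as a symlink in `/var/log/containers` named `<pod>_<namespace>_<container>-<container id>.log`, which points (via `/var/log/pods`) to the JSON log file under `/var/lib/docker/containers`. That is why the DaemonSet mounts both `/var/log` and `/var/lib/docker/containers`: the glob matches the symlink, and reading it requires the target to be visible as well. The Python sketch below shows how the path pattern from the ConfigMap selects one container's file; the file names and container id are made up, and `fnmatchcase` only approximates the glob matching Filebeat does.

```python
from fnmatch import fnmatchcase
from typing import List


def select_logs(pattern: str, filenames: List[str]) -> List[str]:
    """Files under /var/log/containers selected by a Filebeat-style path glob.

    fnmatchcase approximates the glob matching Filebeat performs; it is
    case-sensitive and matches the full path.
    """
    return [f for f in filenames if fnmatchcase(f, pattern)]


# Hypothetical listing of /var/log/containers on one node. Each entry is
# <pod>_<namespace>_<container>-<container id>.log (real ids are 64 hex chars).
listing = [
    "/var/log/containers/web-7d4b9c_tke-test_nginx-3f2a9e.log",
    "/var/log/containers/web-7d4b9c_tke-test_sidecar-8c1d07.log",
    "/var/log/containers/coredns-5d8f_kube-system_coredns-b41e6a.log",
]

container_id = "3f2a9e"  # hypothetical id of the nginx container
pattern = f"/var/log/containers/*-{container_id}.log"
print(select_logs(pattern, listing))
# ['/var/log/containers/web-7d4b9c_tke-test_nginx-3f2a9e.log']
```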

## Deploy Elasticsearch

Elasticsearch is deployed with Helm.

```shell
helm repo add elastic https://helm.elastic.co
```

## Deploy Logstash

Logstash's job here is to consume the logs from Kafka and write them into Elasticsearch.

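The Logstash pipeline configuration is shown below. It ships several Kafka topics into Elasticsearch: the kafka input uses `topics_pattern` to subscribe to all matching topics, and the Elasticsearch index name is built from the Kafka topic name plus the date.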

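```yaml
apiVersion: v1
data:
  logstash.conf: |-
    input {
      kafka {
        bootstrap_servers => "10.0.0.187:9092"
        codec => json { charset => "UTF-8" }
        consumer_threads => 1
        # subscribe to every topic whose full name matches this regex
        topics_pattern => "log-.*"
      }
    }
    output {
      elasticsearch {
        hosts => ["http://elasticsearch-master:9200"]
        codec => json
        # one index per topic per day: <topic>-<YYYY.MM.dd>
        index => "%{[@metadata][topic]}-%{+YYYY.MM.dd}"
      }
    }
kind: ConfigMap
metadata:
  name: logstash-conf
  namespace: log
```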
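Two details of this pipeline can be checked offline. `topics_pattern` is a regular expression that the Kafka consumer matches against the whole topic name, so `log-.*` subscribes to both `log-nginx` and `log-k8s` (and to any `log-` topic created later). The date in `%{+YYYY.MM.dd}` comes from the event's `@timestamp`, which Logstash formats in UTC, so in a UTC+8 timezone events from before 08:00 local time land in the previous day's index. The Python sketch below models both rules, assuming `[@metadata][topic]` carries the topic name as the configuration above expects; the function names are hypothetical.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import Iterable, List

# Same regex as topics_pattern in logstash.conf.
TOPICS_PATTERN = re.compile(r"log-.*")


def subscribed(topics: Iterable[str]) -> List[str]:
    """Topics that topics_pattern => "log-.*" would pick up.

    The Kafka consumer matches the pattern against the whole topic name,
    so re.fullmatch (not re.search) is the right model.
    """
    return [t for t in topics if TOPICS_PATTERN.fullmatch(t)]


def index_name(topic: str, timestamp: datetime) -> str:
    """Model of index => "%{[@metadata][topic]}-%{+YYYY.MM.dd}".

    The date part is @timestamp formatted in UTC, not in local time.
    """
    if timestamp.tzinfo is None:
        raise ValueError("timestamp must be timezone-aware")
    return f"{topic}-{timestamp.astimezone(timezone.utc):%Y.%m.%d}"


cst = timezone(timedelta(hours=8))  # UTC+8
print(subscribed(["log-nginx", "log-k8s", "log", "__consumer_offsets"]))
# ['log-nginx', 'log-k8s']
print(index_name("log-nginx", datetime(2021, 3, 1, 7, 30, tzinfo=cst)))
# log-nginx-2021.02.28  (07:30 at UTC+8 is 23:30 UTC the previous day)
```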

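The Deployment YAML is shown below. It also mounts a ConfigMap named `logstash-yml` (key `logstash.yml`) at `/usr/share/logstash/config/`; that ConfigMap is not shown in this article.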

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: logstash
  namespace: log
spec:
  replicas: 1
  selector:
    matchLabels:
      type: logstash
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        srv: srv-logstash
        type: logstash
    spec:
      containers:
        - command:
            - logstash
            - -f
            - /etc/logstash_c/logstash.conf
          env:
            - name: XPACK_MONITORING_ELASTICSEARCH_HOSTS
              value: http://elasticsearch-master:9200
          image: docker.io/kubeimages/logstash:7.9.3
          imagePullPolicy: IfNotPresent
          name: logstash
          ports:
            - containerPort: 5044
              name: beats
              protocol: TCP
          resources: {}
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
            - mountPath: /etc/logstash_c/
              name: config-volume
            - mountPath: /usr/share/logstash/config/
              name: config-yml-volume
            - mountPath: /etc/localtime
              name: timezone
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      terminationGracePeriodSeconds: 30
      volumes:
        - configMap:
            defaultMode: 420
            items:
              - key: logstash.conf
                path: logstash.conf
            name: logstash-conf
          name: config-volume
        - hostPath:
            path: /etc/localtime
            type: ""
          name: timezone
        - configMap:
            defaultMode: 420
            items:
              - key: logstash.yml
                path: logstash.yml
            name: logstash-yml
          name: config-yml-volume
```

## Deploy Kibana

Kibana is deployed with the following YAML.

The ConfigMap is as follows.

```yaml
apiVersion: v1
data:
  kibana.yml: |
    elasticsearch.hosts: http://elasticsearch-master:9200
    server.host: "0"
    server.name: kibana
kind: ConfigMap
metadata:
  labels:
    app: kibana
  name: nwx-kibana
  namespace: log
```

The Deployment YAML is as follows.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: kibana
  name: nwx-kibana
  namespace: log
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
        - image: kibana:7.6.2
          imagePullPolicy: IfNotPresent
          name: kibana
          ports:
            - containerPort: 5601
              name: kibana
              protocol: TCP
          resources:
            limits:
              cpu: "1"
              memory: 2Gi
            requests:
              cpu: 100m
              memory: 128Mi
          securityContext:
            privileged: false
          terminationMessagePath: /dev/termination-log
          terminationMessagePolicy: File
          volumeMounts:
            - mountPath: /usr/share/kibana/config/kibana.yml
              name: kibana
              subPath: kibana.yml
      dnsPolicy: ClusterFirst
      restartPolicy: Always
      schedulerName: default-scheduler
      securityContext: {}
      serviceAccount: default
      serviceAccountName: default
      terminationGracePeriodSeconds: 30
      volumes:
        - configMap:
            defaultMode: 420
            name: nwx-kibana
          name: kibana
```

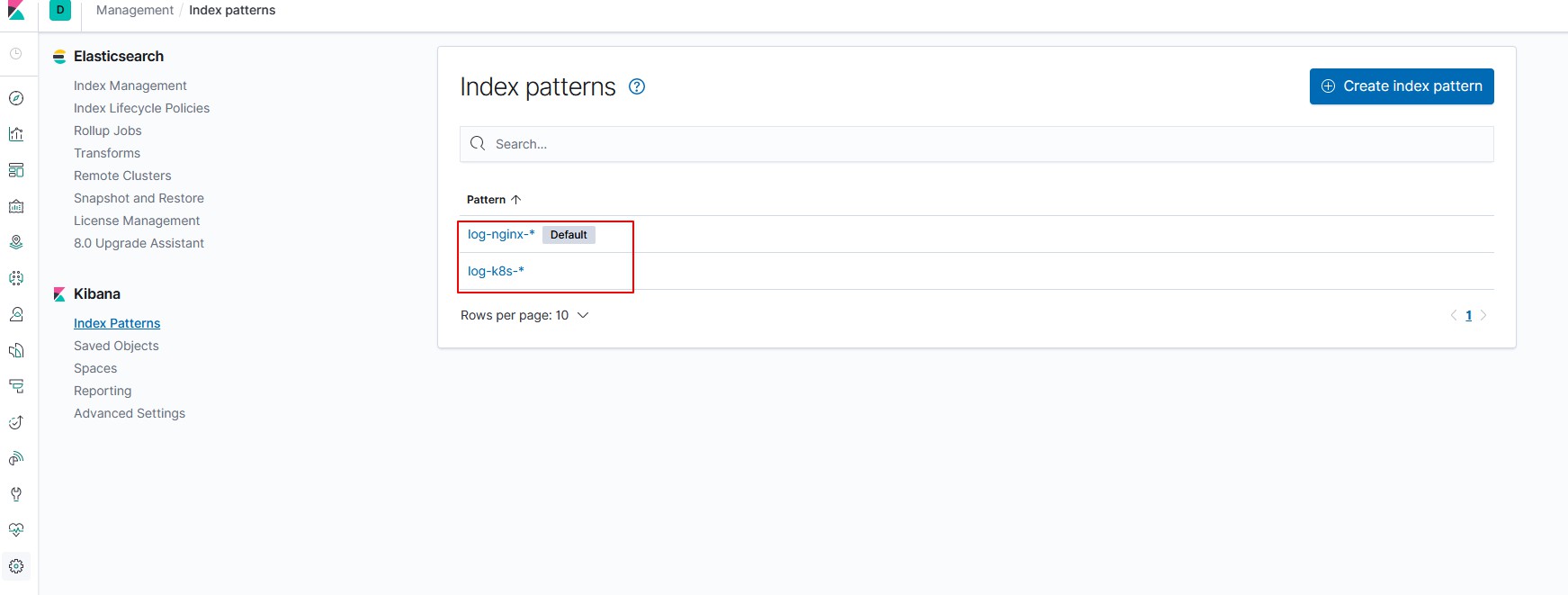

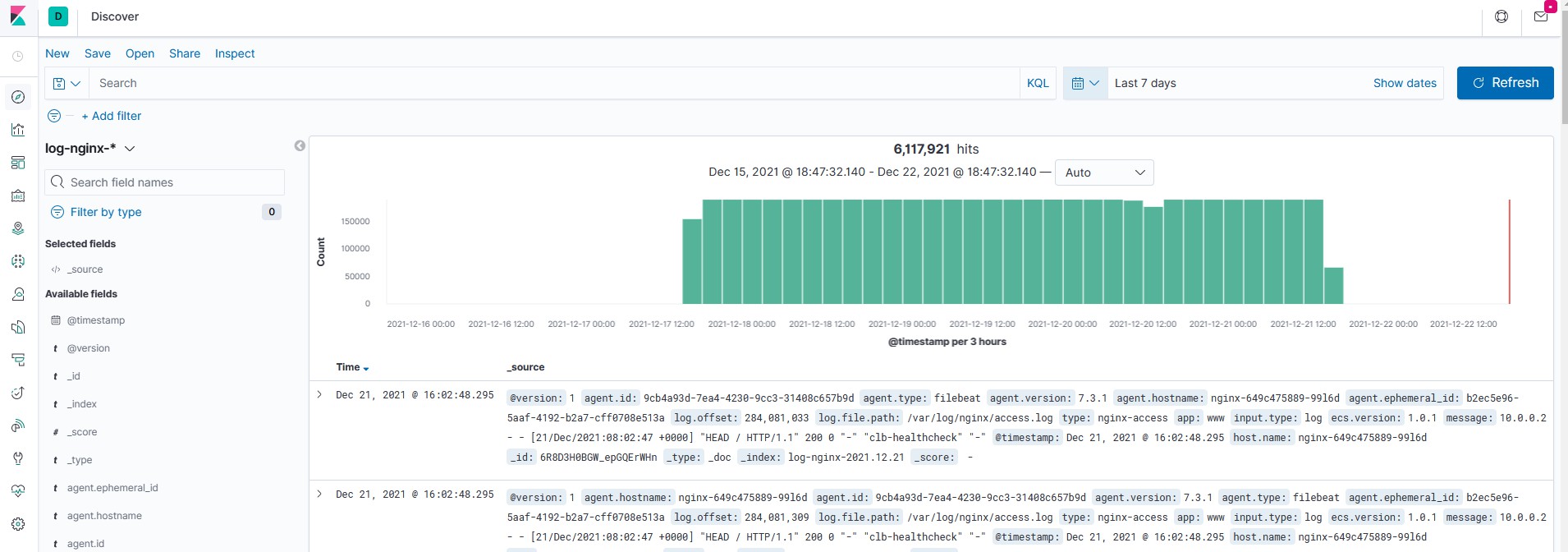

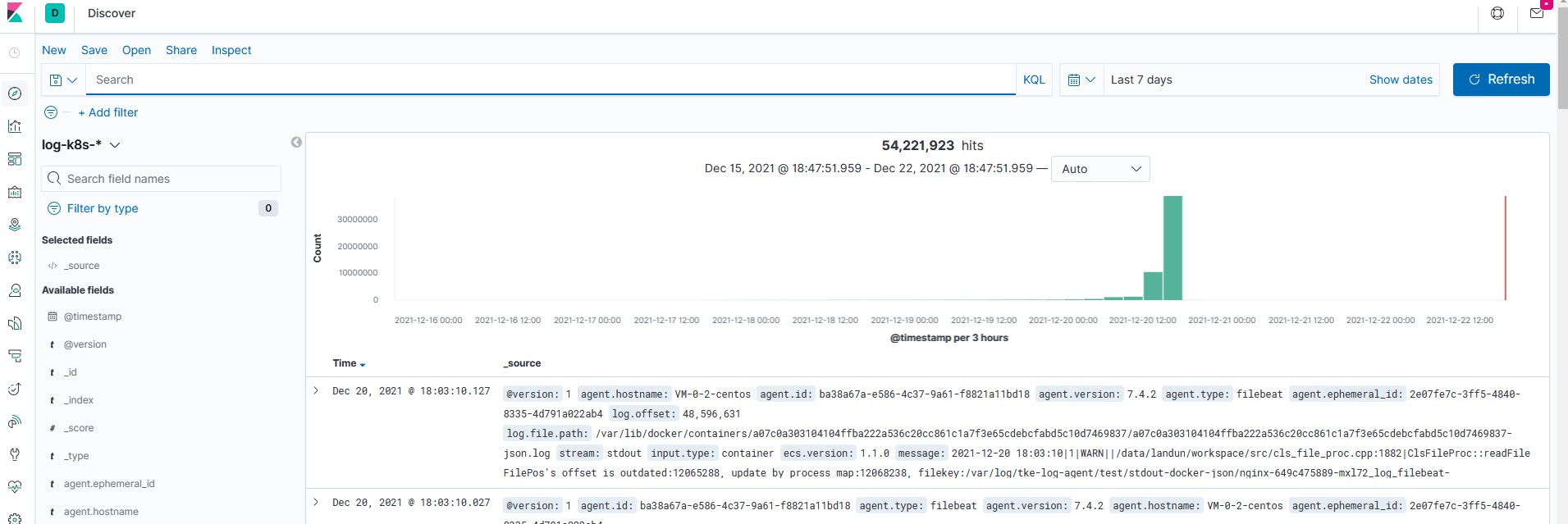

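## Search logs in Kibana

Create an index pattern in Kibana, then search the logs. If the logs show up in the search results, collection is working end to end.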
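Because Logstash writes one index per topic per day (`<topic>-<YYYY.MM.dd>`), the Kibana index pattern is normally a wildcard such as `log-nginx-*`, or `log-*` to cover every topic. The Python sketch below approximates how such a pattern selects indices using `fnmatchcase`; Kibana's own matching may differ in edge cases, and the index names are hypothetical.

```python
from fnmatch import fnmatchcase
from typing import List


def matching_indices(index_pattern: str, indices: List[str]) -> List[str]:
    """Indices a wildcard index pattern such as 'log-*' covers (approximation)."""
    return sorted(i for i in indices if fnmatchcase(i, index_pattern))


# Hypothetical index names after two days of collection.
indices = [
    "log-nginx-2021.03.01",
    "log-nginx-2021.03.02",
    "log-k8s-2021.03.02",
    ".kibana_1",
]

print(matching_indices("log-nginx-*", indices))
# ['log-nginx-2021.03.01', 'log-nginx-2021.03.02']
print(matching_indices("log-*", indices))
# ['log-k8s-2021.03.02', 'log-nginx-2021.03.01', 'log-nginx-2021.03.02']
```

A per-topic pattern keeps nginx access logs separate from the general container logs, while `log-*` is convenient for searching everything at once.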